Tokens

Tokens are the basic unit for measuring AI model usage. They directly affect your credit consumption.

What is a token?

A token is a small unit of text that a model processes. Tokens can be:

- Whole words (like "hello")

- Parts of a word (like "mor" and "ning")

- Punctuation marks (like "." or ",")

- Symbols (like "$" or "@")

- Spaces

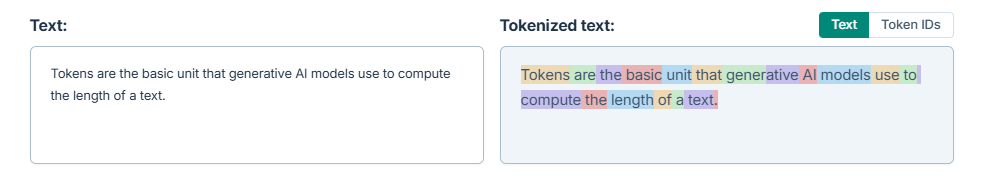

The process of splitting text into tokens is called tokenization. How text is split depends on the model's tokenizer, which differs between AI providers and can also differ between models from the same provider. To see how text is split into tokens, use our tokenizer tool.

Token counts for languages

Token counts vary by language, because a tokenizer splits words in some languages into more tokens than words in others. Approximate ratios:

- English: 1 word ≈ 1.3 tokens

- French/Spanish: 1 word ≈ 1.4 tokens

- Chinese: 1 word ≈ 1.9 tokens

These figures are based on the paper "Beyond Fertility: Analyzing STRR as a Metric for Multilingual Tokenization Evaluation" and on OpenAI documentation.

These are rough estimates. Actual token counts vary based on the specific words, punctuation, and formatting in your text. To see how text is split into tokens, use our tokenizer tool.
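As a rough illustration, the ratios above can be turned into a quick estimate. The Python sketch below multiplies a word count by the ratio for a language and rounds to a whole number of tokens. The `TOKENS_PER_WORD` table and the `estimate_tokens` function are hypothetical names used only for this example; they are not part of GPT for Work, and the result is only as accurate as the rough ratios it relies on.

```python
# Hypothetical lookup table built from the approximate ratios listed above.
# Real token counts depend on the model's tokenizer and the exact text.
TOKENS_PER_WORD = {
    "english": 1.3,
    "french": 1.4,
    "spanish": 1.4,
    "chinese": 1.9,
}


def estimate_tokens(word_count: int, language: str) -> int:
    """Return a rough token estimate for `word_count` words in `language`."""
    ratio = TOKENS_PER_WORD[language.lower()]
    # A tokenizer always produces a whole number of tokens,
    # so round the estimate to the nearest integer.
    return round(word_count * ratio)


print(estimate_tokens(1000, "English"))
print(estimate_tokens(1000, "Chinese"))
```

For exact counts on your own text, the tokenizer tool remains the better source; this kind of estimate is only useful for ballpark planning.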

What counts as tokens in GPT for Work?

GPT for Work uses the following types of tokens:

- Input tokens: The text you send to a model, including your prompt, cell values, global instructions, and any internal instructions used by the add-on. Images can also be used as input and are converted to tokens.

- Output tokens: The text the model generates in response, such as translations, summaries, or extracted data.
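To show that input tokens cover more than the prompt you type, here is a minimal Python sketch that adds up the parts of a single request. The `InputParts` class, its field names, and the token counts in the usage line are all hypothetical and chosen for illustration; GPT for Work does not document the size of its internal instructions here.

```python
from dataclasses import dataclass


@dataclass
class InputParts:
    """Hypothetical token counts for each piece of text sent to the model."""
    prompt: int
    cell_values: int
    global_instructions: int
    internal_instructions: int
    image_tokens: int = 0  # images sent as input are also converted to tokens


def total_input_tokens(parts: InputParts) -> int:
    # Every piece sent to the model counts as input, not just the prompt.
    return (
        parts.prompt
        + parts.cell_values
        + parts.global_instructions
        + parts.internal_instructions
        + parts.image_tokens
    )


# Illustrative numbers only.
request = InputParts(prompt=20, cell_values=150, global_instructions=40, internal_instructions=60)
print(total_input_tokens(request))
```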

Reasoning models are trained to think before they answer: they produce an internal chain of thought and then respond to the prompt. They generate two types of output tokens:

- Reasoning tokens make up the model's internal chain of thought. Most providers don't show these tokens in the model's output.

- Completion tokens make up the model's final visible response.
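Because reasoning tokens are output tokens even when you can't see them, a response can use far more output tokens than its visible text suggests. The Python sketch below makes that arithmetic explicit. The function names and the example counts are hypothetical, chosen only to illustrate the split.

```python
def output_tokens(reasoning: int, completion: int) -> int:
    """Total output tokens for one response from a reasoning model."""
    # Reasoning tokens are usually hidden, but they are still generated
    # by the model and count as output tokens alongside the completion.
    return reasoning + completion


def hidden_share(reasoning: int, completion: int) -> float:
    """Fraction of output tokens that are not part of the visible response."""
    total = output_tokens(reasoning, completion)
    # Avoid dividing by zero when the model produced no output at all.
    return reasoning / total if total else 0.0


# Illustrative numbers only: a short visible answer after a longer chain of thought.
print(output_tokens(800, 200))
print(hidden_share(800, 200))
```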

For more information, see How credits are consumed.